A Brief History of AI

The history of artificial intelligence (AI) is a fascinating journey that spans several decades, marked by significant milestones, breakthroughs, and paradigm shifts. The quest to create intelligent machines that can mimic human cognitive functions and perform complex tasks has captivated the imagination of scientists, researchers, and innovators around the world.

This history is a testament to human ingenuity, perseverance, and the relentless pursuit of intelligent machines. As AI continues to evolve, it promises to revolutionize industries, transform human experiences, and raise profound questions about the nature of intelligence and the role of machines in our lives.

Despite all the current hype, AI is not a new field of study: it has its roots in the 1950s. Setting aside the purely philosophical line of reasoning that runs from the Ancient Greeks to Hobbes, Leibniz, and Pascal, AI as we know it officially began in 1956 at Dartmouth College, where leading experts gathered to brainstorm on simulating intelligence.

This happened some years after Asimov formulated his Three Laws of Robotics (1942) but, more relevantly, only a few years after Turing's famous paper (1950), in which he asked whether machines can think and proposed the imitation game, now popularly known as the Turing test, to assess whether such a machine actually shows any intelligence.

As soon as the Dartmouth research group made public the ideas that arose from that summer meeting, a stream of government funding was set aside for research into creating a nonbiological intelligence.

The Birth of AI

The origins of AI can be traced back to the mid-20th century, with the seminal work of Alan Turing, who proposed the concept of a “universal machine” capable of performing any computational task. Turing’s theoretical framework laid the groundwork for the development of intelligent machines and set the stage for subsequent advancements in AI research.

Early Foundations

In the 1950s and 1960s, AI pioneers such as John McCarthy, Marvin Minsky, and Herbert Simon made significant contributions to the field, introducing fundamental concepts and laying the theoretical foundations of AI. McCarthy coined the term “artificial intelligence” and organized the Dartmouth Conference in 1956, which is widely regarded as the birth of AI as a distinct field of study.

Symbolic AI and Expert Systems

During the 1960s and 1970s, AI research focused on symbolic reasoning and problem-solving using logical rules and symbolic representations. This period saw the development of expert systems, which aimed to capture human expertise in specific domains and make it accessible through computer programs. Notable examples include MYCIN, an expert system for diagnosing infectious diseases, and DENDRAL, a system for chemical analysis.

AI Winter and Resurgence

The 1980s and 1990s were characterized by alternating periods of optimism and skepticism towards AI. The so-called “AI winter” referred to phases of reduced funding and waning interest in AI research due to unmet expectations and overhyped promises. However, advancements in machine learning, neural networks, and computational power led to a resurgence of interest in AI, laying the groundwork for future breakthroughs.

The phantom menace

At that time, AI seemed to be within easy reach, but that turned out not to be the case. By the end of the 1960s, researchers realized that AI was a genuinely hard field, and the initial enthusiasm that had attracted the funding began to fade.

This phenomenon, which has accompanied AI throughout its history, is commonly known as the “AI effect” and has two parts:

- The constant promise that real AI is coming within the next decade;

- The discounting of an AI system's behavior once it has mastered a given problem, which continuously redefines what counts as intelligence.

In the United States, DARPA funded AI research mainly in the hope of creating a perfect machine translator, but two consecutive events wrecked that plan and began what would later be called the first AI winter.

The Automatic Language Processing Advisory Committee (ALPAC) report in the US (1966), which found machine translation to be slower, less accurate, and more expensive than human translation, was followed by the Lighthill report in the UK (1973). Both assessed the feasibility of the field given the developments of the time and reached negative conclusions about the possibility of creating a machine that could learn or be considered intelligent.

These two reports, together with the limited data available to feed the algorithms and the scarce computational power of the machines of that period, caused the field to collapse, and AI fell into disgrace for the rest of the decade.

Attack of the (expert) clones

In the 1980s, though, a new wave of funding in the UK and Japan was motivated by the rise of “expert systems”, which were essentially examples of narrow AI, i.e., systems built to perform a single, specific task.

These programs could only simulate the skills of human experts in specific domains, but this was enough to spark a new funding trend. The most active player during those years was the Japanese government, whose rush to build a fifth-generation computer indirectly pushed the US and the UK to restore funding for AI research.

This golden age did not last long, though: when the funding goals were not met, a new crisis began. In 1987, personal computers became more powerful than Lisp machines, the specialized hardware that was the product of years of AI research. This marked the start of the second AI winter, with DARPA taking a clear position against AI and against further funding.

Machine Learning and Neural Networks

The late 20th century witnessed significant progress in machine learning algorithms, particularly with the development of neural networks and deep learning models. Researchers such as Geoffrey Hinton, Yann LeCun, and Yoshua Bengio made pioneering contributions to the field, propelling machine learning to the forefront of AI research and application.

The return of the Jed(AI)

Fortunately, this period ended in 1993 with the MIT Cog project to build a humanoid robot and with the Dynamic Analysis and Replanning Tool (DART), which paid back the US government for all of its AI funding since 1950. When IBM's Deep Blue defeated Kasparov at chess in 1997, it was clear that AI was back on top.

Over the last two decades, much has been done in academic research, but only recently has AI been recognized as a paradigm shift. There are, of course, several reasons why we are investing so much in AI nowadays, but there is one specific event we think is responsible for the trend of the last five years.

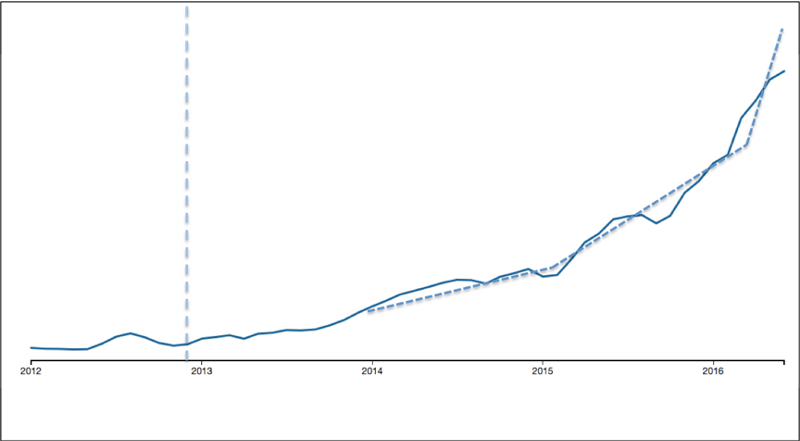

Looking at the figure, we notice that, despite all the developments achieved, AI was not widely recognized until the end of 2012. The figure was created using CB Insights Trends, which plots the popularity of specific words or themes over time (in this case, “Artificial Intelligence” and “Machine Learning”).

More specifically, I drew a line on the date I consider the real trigger of this new wave of AI optimism: December 4, 2012. That Tuesday, a group of researchers presented at the Neural Information Processing Systems (NIPS) conference the details of the convolutional neural network that had won them first place in the ImageNet classification competition a few weeks earlier (Krizhevsky et al., 2012).

Their work improved top-5 classification accuracy from 72% to 85% and established neural networks as a fundamental tool for artificial intelligence.

Within about three years, further advances brought ImageNet classification accuracy to 96%, slightly above the estimated human level (about 95%).

The picture also shows three major growth spurts in AI development (marked by the broken dotted line), each triggered by a major event:

- The acquisition of the three-year-old DeepMind by Google in January 2014;

- The open letter of the Future of Life Institute, signed by more than 8,000 people, and the study on deep reinforcement learning released by DeepMind (Mnih et al., 2015) in February 2015;

- The paper on deep neural networks and tree search for the game of Go, published in Nature in January 2016 by DeepMind scientists (Silver et al., 2016), followed by AlphaGo's impressive victory over Lee Sedol in March 2016 and a series of other impressive achievements (see Ed Newton-Rex's article).

AI in the 21st Century

The 21st century has seen an unprecedented acceleration in AI research and deployment, driven by advancements in data analytics, cloud computing, and the availability of large-scale datasets. Breakthroughs in natural language processing, computer vision, and reinforcement learning have led to transformative applications in areas such as autonomous vehicles, healthcare, finance, and more.

A new hope

AI is intrinsically highly dependent on funding, because it is a long-term research field that requires an immense amount of effort and resources to be fully explored.

There are, then, growing concerns that we may currently be living through the next peak phase (Dhar, 2016), and that the excitement is destined to fade soon.

However, like many others, I believe that this new era is different for three main reasons:

- (Big) data, because we finally have the volume of data needed to feed the algorithms;

- Technological progress, because greater storage capacity and computational power, a better understanding of algorithms, higher bandwidth, and lower technology costs have finally allowed models to digest the information they need;

- The democratization and efficient allocation of resources introduced by the Uber and Airbnb business models, which is reflected in cloud services (e.g., Amazon Web Services) and in GPU-based parallel computing.

References

- Dhar, V. (2016). “The Future of Artificial Intelligence”. Big Data, 4(1): 5–9.

- Krizhevsky, A., Sutskever, I., Hinton, G. E. (2012). “ImageNet classification with deep convolutional neural networks”. Advances in Neural Information Processing Systems 25: 1097–1105.

- Lighthill, J. (1973). “Artificial Intelligence: A General Survey”. In Artificial Intelligence: a paper symposium, Science Research Council.

- Mnih, V., et al. (2015). “Human-level control through deep reinforcement learning”. Nature, 518: 529–533.

- Silver, D., et al. (2016). “Mastering the game of Go with deep neural networks and tree search”. Nature, 529: 484–489.

- Turing, A. M. (1950). “Computing Machinery and Intelligence”. Mind, 59: 433–460.

Ethical and Societal Implications

As AI technologies become increasingly integrated into everyday life, concerns about ethics, bias, privacy, and the societal impact of AI have come to the forefront. Efforts to ensure responsible AI development, transparency, and ethical use of AI are ongoing, reflecting the need for thoughtful consideration of the implications of AI on society.

The evolution of artificial intelligence has been a remarkable journey, marked by significant advancements, paradigm shifts, and transformative applications across various domains. Over time, AI has undergone rapid development, driven by technological innovation, increased computational power, and the growing availability of large-scale datasets. The irony is that we are the ones building these machines, and we will keep building them.